Pixel Art's Past and Future

Over the centuries, continual change in the branches of art has built up much of the heritage that civilizations have accumulated. It is up to us, as observers of these art movements, to pass this treasure on to the future as effectively as possible. In this post, we will discuss the future of Pixel Art by looking at Computer Art, which has emerged since the 1960s, when digitalization began.

Before we begin, let's outline our main subjects. First, we will take a brief look at the history of the pixel, the building block of Pixel Art, from its inception. This will help us make a sound prediction about where the art form is heading.

Etymology of Pixel

The word 'pixel' combines 'pix', a shortening of 'pictures' (via 'pics'), with 'el', the first two letters of 'element', because a pixel is considered the smallest element of an image. The same 'el' ending appears in related terms such as 'voxel' and 'texel'. The shortened form 'pix' for 'pictures' appeared in the headlines of the magazine 'Variety'. However, the phenomenon we now call the pixel had already been described in other words before that magazine edition was published in 1934.

Instead of the word pixel, Alfred Dinsdale used expressions such as mosaic of dots, mosaics containing selenium, and thousands of little squares in his 1927 essay in the journal Wireless World.

In 1965, Frederic C. Billingsley of JPL used the term 'pixel' to describe the picture elements of images taken by space probes on JPL missions. Although he said he had learned the word from Keith E. McFarland, McFarland maintained that he had no idea where it came from and that it was simply already in use at the time.

Let's look at the technology of pixels and their role in our lives now that we've briefly examined their historical context.

Put simply, a pixel is the smallest controllable element of an image shown on a digital display. Pixels differ in color intensity, and this variation is produced by combining red, green, and blue at different intensities.
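To make the idea concrete, here is a minimal sketch of pixels as red, green, and blue intensities. It assumes the common 8-bits-per-channel format (each intensity from 0 to 255), which is an assumption rather than something every display uses; `pack_rgb` and `unpack_rgb` are hypothetical helper names chosen for illustration.

```python
from typing import List, Tuple

# Assumption: 8 bits per channel, so each intensity is an int from 0 to 255.
RGB = Tuple[int, int, int]

def pack_rgb(pixel: RGB) -> int:
    """Pack one pixel's (red, green, blue) intensities into a 24-bit integer 0xRRGGBB."""
    r, g, b = pixel
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError(f"channel value out of range: {channel}")
    return (r << 16) | (g << 8) | b

def unpack_rgb(value: int) -> RGB:
    """Recover the (red, green, blue) intensities from a packed 0xRRGGBB integer."""
    return ((value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF)

# A tiny 2x2 image: every entry is one pixel, the smallest element we can set individually.
image: List[List[RGB]] = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

print([f"#{pack_rgb(p):06X}" for row in image for p in row])
# ['#FF0000', '#00FF00', '#0000FF', '#FFFFFF']
```

Packing the three intensities into one integer mirrors the familiar hexadecimal color codes used by many drawing tools.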

As a brief aside, I'd like to draw your attention to the shape of pixels. Most of us picture pixels as squares. However, this isn't entirely accurate: the 720p and 1080i HD video formats, for example, use square pixels, but some other formats, such as standard-definition digital video, use rectangular (non-square) pixels.

The shape of a screen's pixels matters for how pixel art is displayed, which we will discuss shortly, because it affects the aspect ratio of the image shown on the screen.
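The relationship can be written as a small calculation: the displayed aspect ratio equals the grid's width divided by its height, multiplied by the pixel aspect ratio (a pixel's width divided by its height). The sketch below assumes that definition; the function names are hypothetical, and the 320×200 case is purely illustrative.

```python
from fractions import Fraction

def display_aspect_ratio(width_px: int, height_px: int, pixel_aspect: Fraction) -> Fraction:
    """Shape of the image on screen (width / height).

    pixel_aspect is the width of a single pixel divided by its height;
    it equals 1 for square pixels.
    """
    return Fraction(width_px, height_px) * pixel_aspect

def required_pixel_aspect(width_px: int, height_px: int, target_aspect: Fraction) -> Fraction:
    """Pixel aspect ratio a width_px x height_px grid needs to fill a screen of target_aspect."""
    return target_aspect / Fraction(width_px, height_px)

# Square pixels: a 1280x720 (720p) frame is shown at 16:9.
print(display_aspect_ratio(1280, 720, Fraction(1)))     # 16/9
# Illustrative case: a 320x200 grid stretched to fill a 4:3 screen needs pixels
# that are 5/6 as wide as they are tall, i.e. slightly taller than wide.
print(required_pixel_aspect(320, 200, Fraction(4, 3)))  # 5/6
```

In the second case, art drawn as if its pixels were square would appear 20% taller on screen (6/5 = 1.2), which is why pixel artists need to know the shape of the pixels their work will be shown on.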

The Beginning of Pixel Art

The story of pixel art begins shortly after that of Computer Art. The computers behind pixel art date back to a time when mechanization had a significant impact on human lives.

Computer art, which dates back to the 1960s, has evolved steadily as a result of digitalization and continues to evolve to this day. The Englishman Desmond Paul Henry was one of the first to experiment with computer art, building three drawing machines out of the analog bombsight computers used by bombers during WWII.

The elaborate abstract, curving visualizations that accompany Microsoft's Windows Media Player are reminiscent of the effects produced by Henry's machines. The drawings made by Henry's Drawing Machine established a new branch of art and are among its earliest examples. In 1962, these drawings were exhibited at the Reid Gallery in London.

As noted above, Computer Art is by its very nature in a state of perpetual evolution. By the end of the 1970s, art had begun to appear on digital machines rather than on purely mechanical ones. At the time, such art was simply called graphics shown on a computer screen.

In the early 1980s, David Brandin was elected head of the ACM (Association for Computing Machinery), to which our community belongs. During his administration, he introduced people to computers and instilled in them a passion for technology.

The term “pixel art” was first defined in print by Adele Goldberg and Robert Flegal, in an essay published by the ACM on December 1, 1982.

Adobe, founded in 1982, developed the PostScript language and digital typefaces, helping to popularize drawing, painting, and image-processing software in the 1980s. Adobe Illustrator and Adobe Photoshop were introduced in the years that followed. Flash, a popular multimedia tool for adding animation and interactivity to websites, was released by Macromedia in 1996 and became Adobe Flash after Adobe acquired Macromedia in 2005. In the 2000s, pixel art became more popular in video games and music videos. For example, in 2006 Röyksopp released “Remind Me,” a work fully illustrated with pixel art that the New York Times described as “surprising and mesmerizing.”

Like other schools of art, Pixel Art has developed its own techniques, tools, and audience, as well as the standout works of each period, over a journey that began about 60 years ago and still continues. Pixel art was originally defined by the way it was displayed on a computer screen, but today the term also covers works offered as finished products. Over the intervening years, some works were created using software and fonts developed by various companies, allowing the art to advance alongside technology.

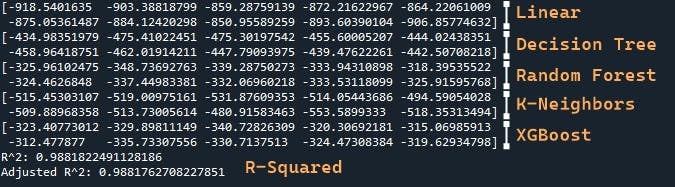

Artificial intelligence algorithms are the latest result of this evolution. Many of the features in today's drawing apps are supported by artificial intelligence. For example, when an app suggests suitable color samples for the palette of a painting in progress, these algorithms are at work.
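The post does not say which algorithms drawing apps use for palette suggestions. As one illustrative possibility only, a classic approach is k-means clustering: group the colors already on the canvas into k clusters and offer each cluster's average color as a swatch. The sketch below is that swapped-in technique, not a description of any particular app; `suggest_palette` is a hypothetical helper, and the deterministic starting centres are a simplification chosen so the result is repeatable.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), 0-255 each

def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two colors in RGB space."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def suggest_palette(pixels: Sequence[Color], k: int, iterations: int = 10) -> List[Color]:
    """Suggest up to k palette swatches by k-means clustering of the canvas colors.

    Hypothetical helper for illustration, not any drawing app's API.
    """
    unique = sorted(set(pixels))
    if k >= len(unique):
        return unique  # few enough colors: offer them all as-is
    # Deterministic start: pick k colors spread evenly through the sorted unique colors.
    step = len(unique) / k
    centres = [tuple(float(c) for c in unique[int(i * step)]) for i in range(k)]
    for _ in range(iterations):
        # Assignment step: attach every pixel to its nearest centre.
        clusters: List[List[Color]] = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(range(k), key=lambda i: _dist2(p, centres[i]))
            clusters[nearest].append(p)
        # Update step: move each centre to the mean color of its cluster.
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centre
                centres[i] = tuple(sum(channel) / len(members) for channel in zip(*members))
    return [tuple(round(c) for c in centre) for centre in centres]

# A canvas with two families of color: near-reds and near-blues.
canvas = [(250, 0, 0)] * 5 + [(255, 10, 10)] * 5 + [(0, 0, 250)] * 5 + [(10, 10, 255)] * 5
print(suggest_palette(canvas, k=2))
```

Real apps may use very different methods, including learned models; k-means is shown here only because it is short and makes the idea of "relevant colors drawn from the work in progress" concrete.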

Another type of application has the computer generate an image object on its own, almost instantly. Although this technology is still young, it already allows us to make a fairly accurate prediction about how it will be used in the future.

Thanks to today's technologies, artificial intelligence and deep learning approaches are gradually making their way into this field. Various versions of this art will soon be seen on most platforms, particularly VR and AR. To make one more prediction, it seems clear that it will also serve the concept of the 'metaverse', which has become popular in films and novels.