author: "Luke Rawlins"
date: "2021-08-30"
title: Cloud Storage and Sanity
description: "Cloud storage and privacy. An attempt to stay sane."
url: /cloud-storage-privacy-and-sanity/
tags:
- Opinion

I have a lot of Apple devices in my household, and my family and I have become accustomed to the ease of use and deep integration of iCloud in iOS and MacOS devices. Apple’s recent announcement that it will add its child safety technology to iOS 15 and MacOS Monterey has been met with a lot of concern, not all of it unfounded. The EFF has written a few fairly compelling pieces about the dangers of this technology.

The flurry of news and opinions circulating through my normal reading lists has really started to tickle some of the more paranoid neurons in my tiny brain. I have to admit I go through cycles of digital privacy paranoia every so often, and not just about my own personal data. I also want to make sure I’m not contributing to the problem of digital surveillance myself, which is why I’ve stripped this site down to the bare essentials (see my privacy policy for details).

Personally, I am of the opinion that you can’t expect absolute privacy from cloud providers. They need to protect their services in order to stay in business, and I sympathize with some of the concerns they have. For instance, I host a few sites for family members and have considered opening up hosting services for others, but any hosting on my end would come with a caveat that if you host egregious shit – I’m going to shut off your site, lock you out, and turn you over to the police. What would count as egregious shit would be completely up to me. Why would I expect anything different from Apple, Google, or Microsoft?

As far as iCloud goes, I’m actually less concerned about the photo upload hash comparison than I am about the iMessage component, but I’ll let you make your own judgments.

This post is really about the ways I think about cloud storage when I’m not bogged down in anxiety over a foreign or domestic intelligence service aggregating my data. If that’s a legitimate concern for you, then please disregard anything I have to say here.

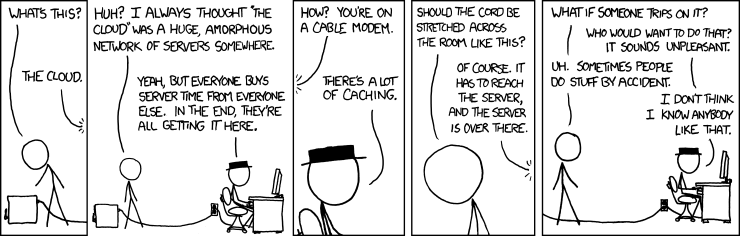

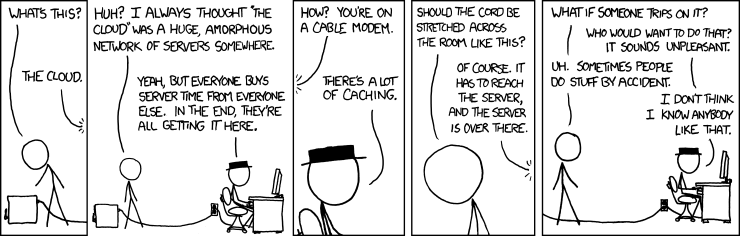

This post is my attempt to avoid the tin foil hat lifestyle.

I think the best way for me to start is to illustrate why self-hosting is not the best option for most of us.

Self-hosting is not an option for most individuals.

The cost of self-hosted options alone makes them unreasonable for most people. Most cloud storage providers will give you 2TB of storage for around $10 a month.

To purchase a bare-minimum NAS with comparable storage, you’re looking at $500 on the very low end. If you replace it every 5 years (good luck getting 5 years out of a cheap unit), you’re spending a little over $8 a month, not including electricity, on something you have to maintain yourself.
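The amortization math above is easy to check. Here is a minimal Python sketch that uses only the figures from this post ($500 for the NAS, a 5-year replacement cycle, about $10 a month for 2TB in the cloud) and, like the paragraph above, ignores electricity, replacement drives, and your own time. The function name is just for illustration.

```python
def nas_monthly_cost(purchase_price: float, lifespan_years: float) -> float:
    """Spread a one-time hardware purchase evenly over its useful life.

    Electricity, replacement disks, and maintenance time are not included.
    """
    return purchase_price / (lifespan_years * 12)


CLOUD_MONTHLY = 10.00  # rough price of 2TB from the big providers, per this post

nas = nas_monthly_cost(500, 5)
print(f"NAS:   ${nas:.2f}/month")            # 500 / 60 months
print(f"Cloud: ${CLOUD_MONTHLY:.2f}/month")
```

A shorter lifespan changes the picture quickly: `nas_monthly_cost(500, 3)` works out to about $13.89 a month, already more than the cloud subscription before you plug the thing in.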

Unless you’re tech savvy, you won’t be able to access your files outside of your home network. Even if you are tech savvy, storage and sync over a slow upload connection at home won’t be as reliable as they are from the cloud.

I can already hear someone screaming at me through the void “I have great upload speeds, with no problems!” Go ahead and add the cost of your fast upload speed to the cost of your NAS.

Self-hosting is simply not an option for most people. It costs too much, requires too much know-how, and is only more secure and private if you know what you’re doing and have the money to spend on upkeep. A misconfiguration or software bug in your NAS, network, or endpoints could quickly wipe out any privacy gains.

About security on self-hosted storage.

Privacy is not the be-all and end-all of security. Can your NAS survive a fire, tornado, earthquake, or volcano? Not to mention a toddler knocking it off a shelf, a stray football thrown in the basement, or a sudden disk failure. Data protection isn’t just protection from bad people; it’s protection from all sorts of things. Do you really want to risk losing all your family photos because your teenage son and his friends were playing games too close to the rack that holds your NAS?

People tend to focus their security practices on confidentiality while forgetting about integrity and availability. Data on a home NAS is almost unquestionably more confidential than data in a public cloud, but protecting that data from mechanical failure, fire, or some other cause of loss is just as important for data security, and I don’t think a $500 investment is going to get you anything even remotely comparable to what you get for $10 a month from OneDrive, iCloud, Dropbox, or Google Drive. Often you’re still going to need some offsite, probably cloud-based, backup.

That’s not to say you don’t need a backup if you’re using a sync service like those I listed; you do. But if the point is to remove a public cloud vendor from your life, you can’t do it for just the cost of a cheap NAS. You still have to trust someone along the way.

You really need to ask yourself whether what you gain in confidentiality (if anything) is worth the trade-off in availability and integrity. I doubt most people will get any benefit from a home NAS, especially since many will want to access it from the internet and will unknowingly expose themselves to other attack vectors by opening access into their home network.

How long can you really expect to self host?

I recently turned 40; if I’m lucky, I have another 40 or maybe 50 years to live. Of those years, probably at most 30 will be spent in a house large enough to justify the space for local storage, and after the age of 70 will I want to keep up with a home network? Maybe, but probably not. Plus, if I were to die along the way, would the other members of my family know how to operate my self-hosted storage systems? Probably not, and I’m betting most of you don’t have families who could or would operate something like that either. As I get older, I’d rather make it easier for my family to get access to financial documents and photos than worry endlessly about government surveillance.

Assuming I’ve got another 600 months (50 years) of life in me, at $10 a month for file and photo storage I’ll spend around $6,000 on cloud storage from now until I die, and I won’t have to find shelf space for it or fiddle with fancy networking… I think that’s a not-terrible deal.

Things I consider when looking at cloud storage

Assuming you are using one of the big cloud providers (Apple, Google, Dropbox, Microsoft), their security and privacy practices are probably not that different. I haven’t read all their privacy policies, and this isn’t a sponsored post, so do your own research on that end. I’m just telling you how I think about cloud storage in order to stay as sane as possible.

Integration, Interoperability, Data Ownership

Assuming relative equality of security, privacy, and capacity across the big cloud platforms, these are the next three big considerations for me. If you use a Linux desktop, your storage options are going to be far narrower than mine, since at the moment I only use MacOS on my desktop.

Integration: between MacOS and iOS, iCloud is seamless, so that’s a check in the pro column for iCloud. But OneDrive has similar features, at least for file storage, and is probably better if you are in a household with mixed Apple and Windows machines. The only thing that keeps me on iCloud is that I think the Photos app is far superior to anything in Office 365, and photo sharing between family members is way too convenient for me to give up.

Data ownership: in a legal sense, iCloud is where it should be; Apple doesn’t own your data. From a practical perspective it’s a little more complicated, which brings me to the next point, interoperability. If you “own” the data but it’s difficult or impossible to move it to another platform, do you really own it?

I have set up my main desktop to keep a local copy of everything, with a backup to a different service just in case I’m ever locked out. In iCloud you “own” your data, but if you want to make sure you can move it around, check your settings and make sure you have enough local storage to keep a local copy. I don’t think this is a uniquely Apple issue; lack of portability is one of the weaknesses of cloud storage in general.
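One way to check whether your “local copy of everything” really is local is to look for files that only exist in iCloud. The Python sketch below assumes the iCloud Drive behavior on MacOS releases of this era, where a file that has been offloaded to the cloud (say `report.pdf`) is replaced on disk by a hidden placeholder named `.report.pdf.icloud`, and it assumes the default iCloud Drive folder under `~/Library/Mobile Documents/com~apple~CloudDocs`. Newer MacOS releases may handle offloaded files differently, and the function name is hypothetical, so treat this as a rough sanity check rather than a guarantee.

```python
import os
from pathlib import Path

SUFFIX = ".icloud"


def find_icloud_placeholders(root: Path) -> list[str]:
    """Return relative paths of files that appear to be stored only in iCloud.

    Assumes offloaded files are represented by hidden ".<name>.icloud" stubs.
    """
    missing = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Require at least one character between the leading dot and the suffix.
            if name.startswith(".") and name.endswith(SUFFIX) and len(name) > len(SUFFIX) + 1:
                original = name[1:-len(SUFFIX)]  # ".report.pdf.icloud" -> "report.pdf"
                rel_dir = Path(dirpath).relative_to(root)
                missing.append(str(rel_dir / original))
    return sorted(missing)


if __name__ == "__main__":
    # Assumed default iCloud Drive location on MacOS; adjust if yours differs.
    drive = Path.home() / "Library" / "Mobile Documents" / "com~apple~CloudDocs"
    for path in find_icloud_placeholders(drive):
        print("not stored locally:", path)
```

An empty result suggests everything in iCloud Drive is on disk and can be picked up by whatever backup you run against that folder.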

On the data ownership point, I actually think Google is ahead of Apple and Microsoft, for two reasons.

1. With Google Drive I can request all my data (or parts of it) to be extracted from Google’s servers and downloaded to my computer. Apple offers something similar for iCloud.

2. You can set up a trusted contact who can download data you’ve designated for them if your account becomes inactive. For example, if I were to die, my wife could still get access to my photos without having to know my Google account info.

   - On this point, I suppose you could just share your account info… but in some sense that’s a violation of the terms of service, and the trusted contact feature lets you designate more than one person and control the types of data each of them can get.

Interoperability: When I say “interoperability” I’m thinking of two specific and different things.

1. Interoperation between different cloud providers, i.e., portability.

2. Compatibility across multiple operating systems.

On point 1, none of the cloud providers are all that eager to work with each other, so you’re unlikely to find many tools that make it easy to move your data without an intermediary. Surprisingly, Apple does provide an exit route, at least for photos, which can be migrated to Google Photos using the data privacy tools Apple has created. As with Google Drive, you can also use Apple’s Data and Privacy site to download a full copy of pretty much everything Apple knows about you from iCloud.

At the time of writing (August 2021) I’m not sure if Microsoft or Dropbox offers anything similar.

Ransomware Protection

OneDrive and Dropbox both keep previous versions of your files and can help you recover if ransomware hits your home computer. As far as I know, Google and Apple don’t offer anything as robust for their cloud customers, which is a shame, and I hope it’s rolled out sooner rather than later. I’m guessing it won’t be long until we see a large-scale ransomware attack on MacOS. I don’t worry much about it at the moment because I don’t download torrents, but at some point that might not be good enough.
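Versioning matters because a sync client can’t tell a ransomware-encrypted file from a legitimate edit; it just uploads the new bytes and the “bad” copy lands in the cloud too. The toy Python model below is only an illustration of that idea: the `VersionedFolder` class and its methods are hypothetical and are not how OneDrive or Dropbox actually implement version history, which comes with its own retention limits and restore tools.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedFolder:
    """Toy synced folder that keeps every previous version of each file."""

    history: dict[str, list[bytes]] = field(default_factory=dict)

    def write(self, name: str, data: bytes) -> None:
        # Never overwrite in place: append a new version instead.
        self.history.setdefault(name, []).append(data)

    def read(self, name: str) -> bytes:
        # The "current" file is simply the newest version.
        return self.history[name][-1]

    def restore(self, name: str, version: int) -> None:
        # Restoring is itself a new write, so the bad version stays in history.
        self.write(name, self.history[name][version])


folder = VersionedFolder()
folder.write("family.jpg", b"original photo bytes")
folder.write("family.jpg", b"\x8f\x02 encrypted by ransomware")  # synced like any edit
folder.restore("family.jpg", 0)
print(folder.read("family.jpg"))  # b'original photo bytes'
```

Without the history list, the second `write` would have replaced the only copy, which is exactly what happens with a plain sync service that has no versioning.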

Recommendations

- Don’t listen to strangers on the internet.
  - Note: I’m a stranger on the internet. 😆

- Don’t trust free services.
  - Note: This entire site is free. 😆

- Don’t download random crap from the internet.
  - Note: You downloaded this site from the internet… whether or not it’s crap is left to the reader.

- Don’t try to replicate robust cloud storage systems with a cheap NAS, unless you know what you are doing and your claim to knowledge can be corroborated by at least one unbiased person.
  - Note: I don’t run a home NAS…

- Try not to pull out the tin foil hat.
  - I haven’t made a hat yet, but sometimes I think I should.

- Don’t eat yellow snow.
